Artificial intelligence looks like software.

In reality, it is a hardware problem disguised as intelligence.

Behind every impressive AI model — every chatbot, image generator, and recommendation engine — sits an infrastructure problem that has nothing to do with clever prompts or smarter algorithms. It has everything to do with how fast data can move inside a machine.

At the center of this problem is something most people outside engineering rarely hear about: HBM, or High Bandwidth Memory.

Without it, modern AI does not scale.

Not slowly.

Not inefficiently.

It simply doesn’t scale at all.

The Hidden Bottleneck Behind AI Progress

When people talk about AI performance, they usually focus on visible metrics:

- Model size

- Number of parameters

- GPUs and accelerators

- Training time

Almost no one talks about memory bandwidth — even though it often determines whether a system performs well or collapses under its own weight.

AI models don’t just compute.

They move enormous amounts of data, continuously.

Every layer of a neural network requires weights to be fetched, activations to be stored, and intermediate results to be reused. This happens billions of times during training and inference.

Opinion:

In AI, computation is cheap.

Data movement is the real tax.

If data cannot reach the processor fast enough, the processor waits. And when that processor costs tens of thousands of dollars, every idle cycle is wasted money.
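One common way to put numbers on this is the roofline model: achievable throughput is capped either by peak compute or by how many operations you perform per byte fetched, multiplied by memory bandwidth. The sketch below is only an illustration. The accelerator figures (`PEAK_FLOPS`, `BANDWIDTH`) and the two workload intensities are assumed values, not measurements of any real chip.

```python
def attainable_flops(peak_flops: float,
                     bandwidth_bytes_per_s: float,
                     intensity_flops_per_byte: float) -> float:
    """Roofline bound: the lower of peak compute and intensity * bandwidth."""
    return min(peak_flops, intensity_flops_per_byte * bandwidth_bytes_per_s)


# Assumed, illustrative accelerator (not a real product spec).
PEAK_FLOPS = 1.0e15   # 1 PFLOP/s of peak compute
BANDWIDTH = 3.0e12    # 3 TB/s of memory bandwidth

# Intensity (FLOPs per byte) needed before compute becomes the limit.
ridge = PEAK_FLOPS / BANDWIDTH

# Assumed workload intensities, chosen to sit on either side of the ridge.
workloads = [
    ("low-reuse workload", 1.0),
    ("high-reuse workload", 500.0),
]

for name, intensity in workloads:
    flops = attainable_flops(PEAK_FLOPS, BANDWIDTH, intensity)
    bound = "memory-bound" if intensity < ridge else "compute-bound"
    print(f"{name}: {flops / PEAK_FLOPS:.1%} of peak ({bound})")
```

Under these assumptions, a workload that does one operation per byte fetched can use well under one percent of the chip's compute. The only ways out are to reuse data more or to raise bandwidth.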

What Exactly Is HBM Memory?

HBM stands for High Bandwidth Memory. It is a specialized type of DRAM designed to deliver extremely high data throughput while spending less energy per bit moved than traditional memory technologies.

Unlike conventional DRAM, HBM:

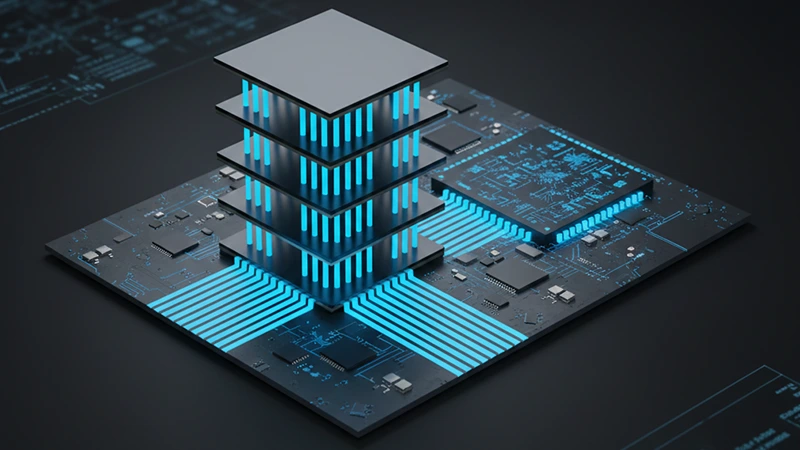

- Is stacked vertically instead of spread flat

- Sits physically close to the processor

- Uses extremely wide memory buses

- Prioritizes throughput over clock speed

A useful way to think about it is this:

Traditional memory tries to go faster.

HBM tries to go wider.

Instead of pushing data through a narrow pipe at very high speed, HBM moves massive amounts of data through many lanes simultaneously.
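The "wider, not faster" idea reduces to simple arithmetic: peak bandwidth is bus width times per-pin data rate. The sketch below uses nominal, commonly cited interface figures (a 64-bit DDR5-6400 channel and a 1024-bit HBM3 stack at 6.4 Gb/s per pin). Treat them as assumptions to check against the relevant datasheets. The function name is made up for this example.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, gbit_per_s_per_pin: float) -> float:
    """Theoretical peak bandwidth in GB/s: width * per-pin rate, bits -> bytes."""
    return bus_width_bits * gbit_per_s_per_pin / 8


# Nominal figures, assumed for illustration.
ddr5_channel = peak_bandwidth_gb_s(bus_width_bits=64, gbit_per_s_per_pin=6.4)
hbm3_stack = peak_bandwidth_gb_s(bus_width_bits=1024, gbit_per_s_per_pin=6.4)

print(f"DDR5-6400 channel: {ddr5_channel:.1f} GB/s")   # 51.2 GB/s
print(f"HBM3 stack:        {hbm3_stack:.1f} GB/s")     # 819.2 GB/s
print(f"Ratio:             {hbm3_stack / ddr5_channel:.0f}x")
```

In this comparison both interfaces run each pin at the same speed. The entire 16x gap comes from the number of lanes.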

How HBM Is Physically Different From Traditional RAM

Standard system memory (DDR4 or DDR5) lives on separate modules, connected to the CPU through relatively long traces on a motherboard. Even the GDDR chips on a graphics card sit centimeters away from the GPU on the circuit board. That distance matters.

HBM changes the layout entirely.

It uses:

- 3D-stacked memory dies

- Through-Silicon Vias (TSVs) to connect layers

- Advanced packaging that places memory next to the processor

This drastically shortens the distance data must travel.

Less distance means:

- Less signal delay between memory and processor

- Higher bandwidth

- Less power wasted moving bits

But it also introduces new challenges — manufacturing complexity, yield loss, and cost.

Why Traditional RAM Is Not Enough for AI

DDR memory was designed for a very different world.

It works well for:

- CPUs

- Sequential tasks

- General-purpose workloads

- Latency-sensitive but bandwidth-light operations

AI workloads behave differently.

They require:

- Massive parallel access

- Constant streaming of model weights

- High sustained bandwidth

- Predictable data flow

If memory cannot feed the GPU fast enough, the GPU stalls. And when a single AI GPU can cost more than an entire server rack used to, idle time becomes unacceptable.
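A rough way to see the stall is to estimate how fast a model could generate text if memory bandwidth were the only limit. The sketch below assumes a hypothetical 70-billion-parameter model stored at 2 bytes per weight, a batch size of one, and that every weight is read from memory once per generated token. It ignores caches, the attention cache, and compute time, so it is an upper bound rather than a prediction. The two bandwidth figures are illustrative assumptions.

```python
def max_tokens_per_second(param_count: float,
                          bytes_per_param: float,
                          bandwidth_bytes_per_s: float) -> float:
    """Bandwidth-only ceiling: each token requires streaming all weights once."""
    weight_bytes = param_count * bytes_per_param
    return bandwidth_bytes_per_s / weight_bytes


# Hypothetical model and memory systems (assumed values).
PARAMS = 70e9          # 70 billion parameters
BYTES_PER_PARAM = 2    # 16-bit weights

memory_systems = {
    "HBM-class, 3 TB/s": 3.0e12,
    "DDR-class, 100 GB/s": 1.0e11,
}

for name, bandwidth in memory_systems.items():
    rate = max_tokens_per_second(PARAMS, BYTES_PER_PARAM, bandwidth)
    print(f"{name}: at most {rate:.1f} tokens/s")
```

With the same processor attached, the slower memory system in this sketch caps generation at less than one token per second. The compute units would spend nearly all their time waiting.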

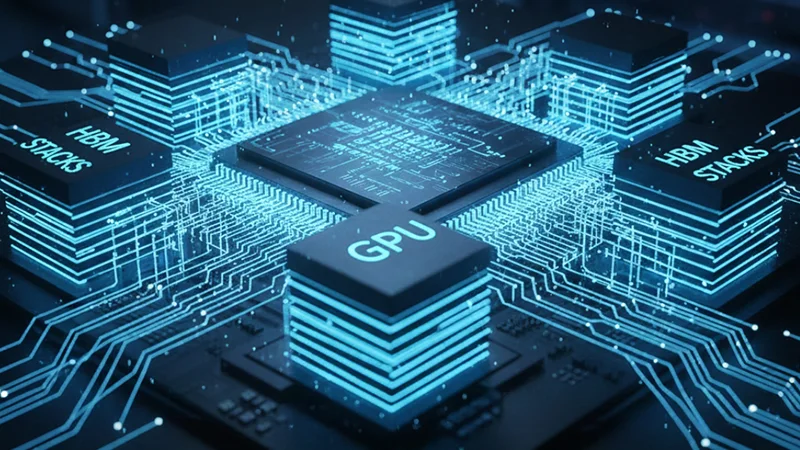

Why GPUs Need HBM Specifically

Modern AI accelerators rely on extreme parallelism:

- Thousands of compute cores

- Tens of thousands of threads

- Continuous execution pipelines

HBM solves three critical problems at once:

1. Bandwidth

HBM delivers hundreds of gigabytes per second per stack, and several terabytes per second when multiple stacks share a package. That is far beyond what standard memory can provide.

2. Latency

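Because HBM sits next to the processor, signals travel a much shorter path. The DRAM cells themselves are no faster, so the bigger win is serving many requests in parallel instead of queueing them.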

3. Energy Efficiency

Moving data consumes power. HBM moves more data using less energy per bit.

This is why:

- NVIDIA’s H100 uses HBM3 (the PCIe variant uses HBM2e)

- AMD’s MI300 uses HBM3

- Future AI accelerators depend almost entirely on HBM evolution

Opinion:

No HBM, no large-scale AI. Period.

The Evolution: HBM1 to HBM3 (and Beyond)

HBM didn’t appear overnight.

Each generation solved real limitations:

- HBM1: Proved stacked memory was viable

- HBM2: Increased capacity and bandwidth

- HBM2e: Improved speeds for early AI scaling

- HBM3: Designed explicitly for modern AI workloads

HBM3 dramatically increases:

- Bandwidth per stack

- Total memory capacity per package

- Power efficiency

Newer versions (HBM3e and beyond) aim to support even larger models and higher inference throughput, not by brute force, but by smarter data movement.

Why Stacking Memory Changes Everything

HBM uses vertical stacking, which fundamentally alters how memory behaves.

Memory dies are stacked on top of each other and connected using microscopic vertical channels. These stacks are then placed beside the GPU on the same package.

This architecture:

- Shrinks physical distance

- Allows massive parallel access

- Reduces energy loss

But it also introduces trade-offs:

- Manufacturing complexity increases

- Yields decrease

- Costs rise sharply
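The yield penalty can be sketched with a deliberately crude model: a stack is only good if every die in it is good and every bonding step succeeds, and failures are assumed independent. The per-die and per-bond yields below are made-up illustrative values. Real manufacturers test dies before stacking and build in redundancy, so actual numbers will differ.

```python
def stack_yield(die_yield: float,
                dies_per_stack: int,
                bond_yield_per_layer: float = 1.0) -> float:
    """Probability that a whole stack works, assuming independent failures.

    Every die must be good and every layer's bonding step must succeed.
    """
    return (die_yield ** dies_per_stack) * (bond_yield_per_layer ** dies_per_stack)


# Assumed, illustrative yields (not industry data).
DIE_YIELD = 0.95
BOND_YIELD = 0.99

for height in (1, 4, 8, 12):
    y = stack_yield(DIE_YIELD, height, BOND_YIELD)
    print(f"{height:>2}-high stack: {y:.1%} expected to work")
```

Even with individually high yields, the losses compound with every layer added. That compounding is one reason taller stacks cost disproportionately more.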

Opinion:

HBM is expensive because physics is expensive.

The Supply Chain Nobody Talks About

HBM is not just a technical challenge.

It is an industrial bottleneck.

Only a handful of companies can manufacture HBM at scale:

- SK Hynix (current market leader)

- Samsung

- Micron

Demand for AI exploded faster than memory manufacturing could adapt.

The result:

- Severe supply constraints

- Long lead times

- Price pressure across the entire AI ecosystem

This bottleneck explains why:

- AI chips remain scarce

- Hardware prices stay elevated

- Large vendors maintain strong margins

The limitation isn’t software.

It’s memory supply.

AI Scaling Is Now a Memory Problem

In the early days of AI:

- Compute limited progress

Today:

- Memory bandwidth limits model performance

- Memory capacity limits context windows

- Power limits everything else

This shift explains why techniques once considered “old” are back:

- Quantization

- Pruning

- Sparse models

- Efficient architectures

Simply making models bigger is no longer enough.
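To make the capacity side concrete, the sketch below estimates two memory consumers: the model weights at different precisions, and the attention key/value cache that grows with context length. The model shape (70 billion parameters, 80 layers, 8 key/value heads of dimension 128) and the 80 GB capacity are hypothetical values chosen for illustration. The cache formula is the usual back-of-the-envelope one and ignores framework overhead.

```python
def weight_bytes(param_count: float, bits_per_param: int) -> float:
    """Memory needed to store the weights at a given precision."""
    return param_count * bits_per_param / 8


def kv_cache_bytes(layers: int, kv_heads: int, head_dim: int,
                   context_tokens: int, bytes_per_value: int = 2,
                   batch: int = 1) -> int:
    """Key/value cache size: one key and one value vector per head, layer, token."""
    return 2 * layers * kv_heads * head_dim * context_tokens * bytes_per_value * batch


# Hypothetical model and accelerator (assumed values).
PARAMS = 70e9
CAPACITY_BYTES = 80e9

for bits in (16, 8, 4):
    size = weight_bytes(PARAMS, bits)
    fits = "fits" if size <= CAPACITY_BYTES else "does not fit"
    print(f"{bits:>2}-bit weights: {size / 1e9:.0f} GB ({fits} in 80 GB)")

for context in (4096, 32768, 131072):
    cache = kv_cache_bytes(layers=80, kv_heads=8, head_dim=128, context_tokens=context)
    print(f"{context:>6}-token context: {cache / 2**30:.2f} GiB of cache per sequence")
```

Under these assumptions, quantizing from 16 to 8 bits is what lets the weights fit on a single device at all. The cache for a long context then competes for whatever capacity is left over.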

Expert Perspective: Architecture Over Algorithms

Hardware researchers and AI system architects increasingly agree on one thing:

The next breakthroughs will come from architecture, not just algorithms.

That means:

- Better memory hierarchies

- Smarter data movement

- Hardware–software co-design

- AI models built around physical constraints

In other words, intelligence is now constrained by electrons, distance, and heat.

Why Users Should Care (Even If You Never Touch a GPU)

HBM affects more than data centers.

It indirectly controls:

- AI availability

- Pricing

- Latency

- Who can build and deploy large models

If HBM is scarce, AI becomes expensive.

If AI is expensive, access concentrates.

HBM quietly shapes who controls AI at scale.

The Economics of Memory-Driven AI

AI costs are not just about GPUs.

They include:

- Memory availability

- Packaging complexity

- Power delivery

- Cooling requirements

HBM sits at the center of all of this.

As long as memory remains the bottleneck, AI expansion will remain uneven — favoring those with access to advanced hardware supply chains.

Final Thought

AI is not limited by ideas.

It is limited by physics.

HBM is the quiet enabler — and silent gatekeeper — of modern artificial intelligence.

The future of intelligence may look digital, abstract, and software-defined.

But it is built on very real constraints: electrons, distance, heat, and bandwidth.

Right now, memory is the battlefield.

And whoever controls it controls how far AI can go.

FAQ

A1: HBM (High Bandwidth Memory) is a memory technology designed to move very large amounts of data at once, making it ideal for AI and high-performance computing workloads.

A2: AI models constantly move data between memory and processors. HBM provides the bandwidth and efficiency needed to keep GPUs working without waiting for data.

A3: Unlike DDR4 or DDR5 RAM, HBM is stacked vertically and placed close to the processor, allowing much higher bandwidth with lower power consumption.

A4: GPUs process thousands of operations in parallel. HBM feeds data fast enough to prevent expensive GPUs from sitting idle during AI training and inference.

A5: HBM uses complex 3D stacking and advanced packaging techniques. These increase manufacturing difficulty, reduce yields, and raise production costs.

A6: Yes. As AI models grow larger and more data-intensive, memory bandwidth and efficiency will become even more critical, making HBM central to future AI hardware.