Artificial intelligence feels almost magical — until you realize it runs on something very real: your data.

Every prompt you type, every correction you make, every hesitation, scroll, and interaction becomes a signal. Individually, those signals feel insignificant. At scale, they form behavioral patterns that AI systems learn from.

The problem isn’t that AI uses data.

The real problem is that most users don’t understand how, why, or to what extent that data is used.

And in an era where AI is embedded into daily tools, that gap in understanding matters more than ever.

Data Is Not Fuel — It’s Behavior

You’ve probably heard the phrase “data is the fuel of AI.”

It sounds neat, but it oversimplifies something far more important.

Fuel powers a machine.

Data shapes how the machine behaves.

In modern AI systems, especially generative models, data determines:

- What the system considers normal or acceptable

- Which answers sound confident or uncertain

- What patterns get reinforced repeatedly

- Which biases are amplified — and which are reduced

This is why AI doesn’t simply “know” things.

It learns tendencies.

Opinion:

AI doesn’t reflect humanity.

It reflects the data we allowed it to observe.

You can already see this effect in how AI quietly adapts inside apps you use every day. These systems evolve based on usage, not instructions. We break that down in detail here:

👉 How AI works in everyday apps

Why This Matters More Than Users Realize

Most people think of data as something static: names, emails, files, photos.

AI treats data differently.

To an AI system, behavior is data.

- How long you pause before responding

- Which option you select when given choices

- How often you correct suggestions

- When you stop engaging

All of this feeds learning loops.

That means even when you’re not sharing personal information, you’re still shaping how the system understands users like you.
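
To make the idea of a learning loop concrete, here is a minimal sketch in Python. It assumes a made-up event format (the `Interaction` class and its fields are hypothetical, not any real product's schema) and shows how events with no name or email attached can still be rolled up into signals about what users like you prefer.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Interaction:
    """One anonymous interaction event (hypothetical schema)."""
    suggestion_shown: str
    accepted: bool
    seconds_to_respond: float


def summarize(events):
    """Aggregate raw events into signals a learning loop could use."""
    shown = Counter(e.suggestion_shown for e in events)
    accepted = Counter(e.suggestion_shown for e in events if e.accepted)
    # Share of times each suggestion was accepted when it was shown.
    acceptance_rate = {s: accepted[s] / shown[s] for s in shown}
    # Average hesitation before the user reacted.
    avg_pause = sum(e.seconds_to_respond for e in events) / len(events)
    return acceptance_rate, avg_pause


events = [
    Interaction("formal tone", accepted=False, seconds_to_respond=6.0),
    Interaction("casual tone", accepted=True, seconds_to_respond=1.5),
    Interaction("casual tone", accepted=True, seconds_to_respond=2.0),
    Interaction("formal tone", accepted=True, seconds_to_respond=4.5),
]

rates, avg_pause = summarize(events)
print(rates)      # {'formal tone': 0.5, 'casual tone': 1.0}
print(avg_pause)  # 3.5
```

Nothing in those events identifies a person, yet the summary already says "casual tone works better for this group." Real systems are far more elaborate, but the principle is the same.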

What Data AI Systems Actually Use

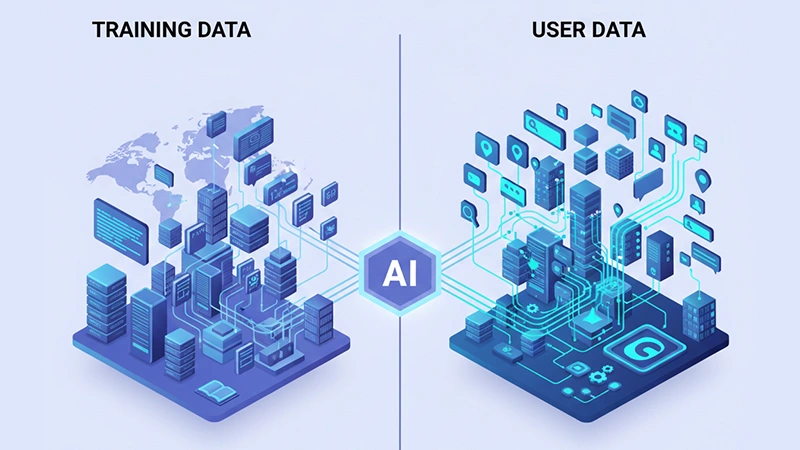

There’s a critical distinction most users miss: training data and user data are not the same thing.

Understanding this difference removes much of the confusion around AI privacy.

1. Training Data (Pre-Training)

This stage happens before any user ever opens the app.

Large AI models are trained on massive datasets that may include:

- Public web content

- Books and articles

- Open-source code repositories

- Licensed datasets

- Human-generated examples

This process typically takes weeks to months and requires enormous computational resources.

Once trained, models don’t keep a searchable copy of their training documents or track exact sources, although they can sometimes reproduce memorized fragments. Instead, they encode statistical relationships: how language flows, how concepts connect, and how probabilities stack.

Running a trained model doesn’t have to happen in the cloud, either. Modern computing is shifting toward on-device AI processing, which reduces reliance on cloud servers. AI PCs are designed specifically for this transition:

👉 AI PCs Explained
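
The point that a trained model encodes statistical relationships rather than documents can be illustrated with a toy example. The sketch below is a simple bigram counter, not how large generative models are actually built (those use neural networks with billions of parameters), and the three-sentence corpus is invented for illustration. What it shows is the shape of the idea: after training, what remains is a table of probabilities, not the original sentences.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count which word follows which, then turn counts into probabilities."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    # Normalize each word's follower counts so they sum to 1.
    return {
        word: {nxt: n / sum(followers.values()) for nxt, n in followers.items()}
        for word, followers in counts.items()
    }


# Invented toy corpus, for illustration only.
corpus = [
    "data shapes behavior",
    "data shapes models",
    "data trains models",
]

model = train_bigram(corpus)
print(model["data"])    # 'shapes' at roughly 0.67, 'trains' at roughly 0.33
print(model["shapes"])  # {'behavior': 0.5, 'models': 0.5}
```

The model has learned a tendency ("data" is usually followed by "shapes"), which is a much smaller and blurrier thing than the text it was trained on.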

2. User Data (Post-Training)

This is where users should pay closer attention.

Depending on platform policies, user data may be used for:

- Improving response accuracy

- Safety tuning and moderation

- Detecting misuse or abuse

- Product analytics and optimization

This can include:

- Prompts and replies

- Feedback and corrections

- Session-level context

- Metadata such as device type or region

Opinion:

If the product is free, your interaction is part of the value exchange.

That doesn’t mean exploitation — but it does mean incentives matter.

Does AI “Remember” What You Say?

Short answer: usually no — but context matters.

Most consumer AI systems:

- Use conversation context temporarily

- Do not treat chats as long-term personal memory unless a history or memory feature is turned on

- May retain anonymized interactions for review

Privacy-focused or enterprise tools often add:

- Data isolation

- Disabled training on user inputs

- Custom retention controls

This is one reason paid AI tools exist.

Privacy requires infrastructure, governance, and cost.

The Real Risk Isn’t Surveillance — It’s Inference

Many users imagine AI risks as constant surveillance.

That’s not the core issue.

The real risk is inference.

Even without names, emails, or identifiers, AI systems can infer:

- Intent and interests

- Emotional tone

- Knowledge level

- Behavioral consistency

These inferences matter deeply in areas like:

- Marketing and personalization

- Hiring and screening systems

- Credit and risk scoring

- Political messaging

Critical insight:

You don’t need to reveal your identity for AI to profile your behavior.

This ties directly into how AI is changing software itself — not replacing apps, but reshaping how they function behind the scenes:

👉 Is AI Replacing Our Daily Apps?
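
Here is a rough sketch of how inference can work without any identifiers. It assumes a hand-written keyword table (`INTEREST_KEYWORDS` is hypothetical; a real system would learn such signals from data rather than hard-code them) and scores a short, anonymous session of prompts.

```python
from collections import Counter

# Hypothetical keyword lists; a real system would learn these signals from data.
INTEREST_KEYWORDS = {
    "finance": {"loan", "mortgage", "credit", "budget"},
    "health": {"symptoms", "diet", "sleep", "anxiety"},
    "career": {"resume", "interview", "salary", "promotion"},
}


def infer_interests(prompts):
    """Score each interest category by keyword hits across all prompts."""
    scores = Counter()
    for prompt in prompts:
        words = set(prompt.lower().replace("?", "").split())
        for category, keywords in INTEREST_KEYWORDS.items():
            scores[category] += len(words & keywords)
    # Highest-scoring categories first.
    return scores.most_common()


# No name, email, or account ID anywhere in this session.
session = [
    "How do I negotiate salary in an interview?",
    "Can I get a mortgage with average credit?",
    "Rewrite my resume summary",
]

print(infer_interests(session))
# [('career', 3), ('finance', 2), ('health', 0)]
```

Three ordinary prompts are enough to suggest someone who is job hunting and thinking about buying a home. That is a profile, even though the system never learned who the person is.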

Who Really Controls Your Data?

Most users accept terms without reading:

- Data retention periods

- Model improvement clauses

- Third-party processing rules

- Jurisdictional limitations

Technology companies are not villains — but they are businesses.

Their priorities are:

- Better models

- Competitive advantage

- Market leadership

Privacy exists as a constraint, not a primary objective.

Regulation Is Catching Up — Slowly

Governance is improving, but it lags innovation.

Existing frameworks include:

- GDPR (EU)

- EU AI Act

- US state-level data protection laws, such as California’s CCPA

These rules help, but they don’t eliminate the need for user awareness.

Regulation defines boundaries.

Understanding defines leverage.

What Users Should Actually Do

No paranoia. Just informed habits.

As a user:

- Avoid sharing sensitive personal information

- Treat AI conversations as semi-public

- Use paid or enterprise tools for professional work

- Review privacy settings at least once

- Learn where opt-out controls exist
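
The first habit on that list can be partly automated. The sketch below strips email addresses and phone-like numbers from a prompt before it is sent anywhere. It is a minimal example under stated assumptions: the two regular expressions are deliberately simple, will miss many real-world formats, and are no substitute for a proper data-loss-prevention tool.

```python
import re

# Deliberately simple patterns; they will miss many real-world formats.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(prompt):
    """Replace email addresses and phone-like numbers with placeholders."""
    prompt = EMAIL.sub("[EMAIL]", prompt)
    prompt = PHONE.sub("[PHONE]", prompt)
    return prompt


text = "Email jane.doe@example.com or call +1 555-010-9999 about my invoice."
print(redact(text))
# Email [EMAIL] or call [PHONE] about my invoice.
```

Running something like this before pasting text into an AI tool won’t catch everything, but it turns "avoid sharing sensitive information" from a good intention into a default.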

Opinion:

AI literacy is becoming the new digital literacy.

Bottom Line

AI doesn’t steal your data.

It extracts value from interaction.

That’s the exchange.

The real question isn’t whether AI uses your data.

It’s whether you understand what you’re giving — and what you’re getting back.

In a world powered by generative models, awareness isn’t optional anymore.

It’s leverage.

FAQ

Q1: Does AI remember what I say?

A1: Most AI tools use temporary context and don’t permanently store personal chats, depending on platform policy.

Q2: Can AI profile me without knowing who I am?

A2: Yes. AI can infer intent, preferences, and behavior patterns without explicit identity data.

Q3: Are free AI tools unsafe?

A3: Not unsafe, but free tools often use interactions to improve models. Paid tools usually offer stronger privacy controls.

Q4: How can I protect my data when using AI?

A4: Avoid sensitive data, review privacy settings, and use privacy-focused or paid tools when data matters.