Hook: When Software Stopped Being Predictable

For decades, software came with a quiet promise.

If engineers wrote the right rules, the system would behave exactly as expected. That promise powered banks, airlines, hospitals, governments, and the modern internet itself. Software was reliable because it was controlled.

Then generative AI arrived — and quietly broke that assumption.

Not by being “better software,” but by being something fundamentally different. Suddenly, systems could respond creatively, adapt to context, and generate new content. At the same time, they could behave inconsistently, produce wrong answers with total confidence, and resist simple explanation.

To understand why this shift feels so unsettling, we need to stop treating generative AI and traditional software as versions of the same thing. They are not.

They represent two different philosophies of computing.

Problem: Why the Comparison Feels So Confusing

Most explanations frame generative AI as the next step in software evolution. That framing creates confusion.

If AI is just advanced software, why does it:

- Give different answers to the same question?

- Fail in ways that don’t look like bugs?

- Sound confident even when it’s wrong?

The confusion comes from treating AI like rule-based software, then judging it by standards it was never designed to meet.

The real difference isn’t speed, scale, or intelligence.

It’s how decisions are made.

Insight: Rules vs Patterns Change Everything

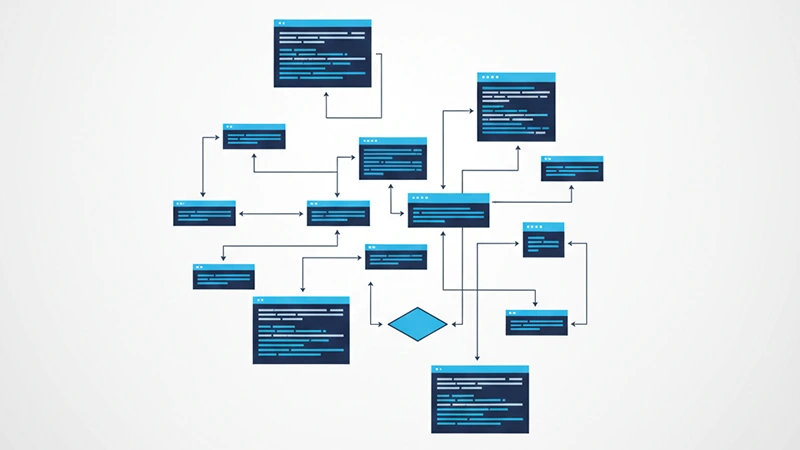

At the core, traditional software and generative AI operate on opposite assumptions.

Traditional software is rule-driven.

Generative AI is pattern-driven.

That single difference explains nearly every strength, weakness, and risk people experience with AI today.

Once you see this clearly, the rest falls into place.

Traditional Software: Certainty by Design

Classic software systems are deterministic. Engineers define exact rules, test edge cases, and lock behavior into code.

The same input produces the same output — every time.

If a banking system calculates interest incorrectly, it’s a bug.

When a medical system misclassifies a patient, that’s a clear failure.

A government database returning the wrong record means something is broken.

There is no interpretation. Only logic.
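To make "same input, same output" concrete, here is a minimal sketch of a rule-based interest calculation. The function name, the simple-interest formula, and the rounding mode are illustrative assumptions, not any real bank's method. It uses Python's `decimal` module so that amounts in cents are exact rather than approximated by floating point.

```python
from decimal import Decimal, ROUND_HALF_EVEN

def monthly_interest(balance: Decimal, annual_rate: Decimal) -> Decimal:
    """Return one month of simple interest, rounded to cents.

    The rule is explicit (balance * rate / 12, rounded half-to-even).
    There is no randomness and nothing learned from data.
    """
    raw = balance * annual_rate / Decimal(12)
    return raw.quantize(Decimal("0.01"), rounding=ROUND_HALF_EVEN)

# Run the same calculation 1,000 times and collect the distinct results.
results = {monthly_interest(Decimal("1000.00"), Decimal("0.05")) for _ in range(1000)}
print(results)  # {Decimal('4.17')}
```

If that set ever contained more than one value, it would be a bug with a specific line of code to blame, which is exactly the traceability described above.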

This is why traditional software remains essential in environments where:

- Accuracy is non-negotiable

- Errors must be traceable

- Accountability must be clear

Financial transactions, infrastructure systems, compliance-heavy industries, and safety-critical platforms all depend on this predictability.

That’s also why companies like SAP, Oracle, IBM, and Salesforce still rely heavily on traditional architectures at their core.

Opinion:

Traditional software didn’t become obsolete. It became invisible — because it works.

Generative AI: Intelligence Without Rules

Generative AI flips the model completely.

Instead of asking, “What rules should we write?” engineers ask, “What patterns should the system learn?”

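Large language models learn statistical relationships from massive datasets. They don’t store explicit rules for correctness. Instead, they estimate a probability distribution over the next token (a word or word fragment) given the text so far, and they generate output by choosing from that distribution one token at a time.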

This is why:

- The same prompt can produce different responses under the usual sampling settings

- Outputs feel contextual rather than exact

- The system can sound fluent without being factual
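The behaviors in the list above follow directly from how generation works. A minimal sketch, assuming a toy three-word vocabulary with invented scores, shows the mechanism: raw scores become probabilities via softmax, and the next token is sampled from them. Real LLMs do this over vocabularies of tens of thousands of tokens or more, one token at a time. The `temperature` parameter mirrors a common sampling setting, but the names and numbers here are hypothetical.

```python
import math
import random

# Hypothetical next-token scores ("logits") after the prompt
# "The capital of Australia is". The numbers are invented for illustration.
LOGITS = {"Canberra": 3.0, "Sydney": 2.2, "Melbourne": 1.5}

def softmax(logits: dict[str, float], temperature: float) -> dict[str, float]:
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def next_token(logits: dict[str, float], temperature: float, rng: random.Random) -> str:
    if temperature == 0:
        # Greedy decoding: always pick the single most likely token.
        return max(logits, key=logits.get)
    probs = softmax(logits, temperature)
    return rng.choices(list(probs), weights=list(probs.values()), k=1)[0]

rng = random.Random(0)
sampled = [next_token(LOGITS, 1.0, rng) for _ in range(10)]
greedy = [next_token(LOGITS, 0, rng) for _ in range(10)]
print(sampled)  # which tokens appear depends on the random seed
print(greedy)   # always 'Canberra', ten times
```

Note that "Sydney" is a fluent, plausible continuation that is factually wrong. The sampler has no notion of truth; it only has the scores.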

Generative AI doesn’t “know” facts the way software enforces rules.

It predicts.

And prediction, by nature, is probabilistic.

Critical insight:

Generative AI doesn’t understand reality.

It understands likelihood.

Why This Difference Matters More Than It Seems

This shift changes how systems are built, trusted, and governed.

With traditional software:

- Behavior is auditable

- Errors are traceable

- Responsibility is well defined

With generative AI:

- Behavior emerges from training

- Errors are probabilistic

- Accountability becomes blurry

This is why no serious organization replaces traditional systems with AI alone.

Even companies building advanced AI still rely on classical software for:

- Authentication

- Billing

- Security

- Data pipelines

- Infrastructure orchestration

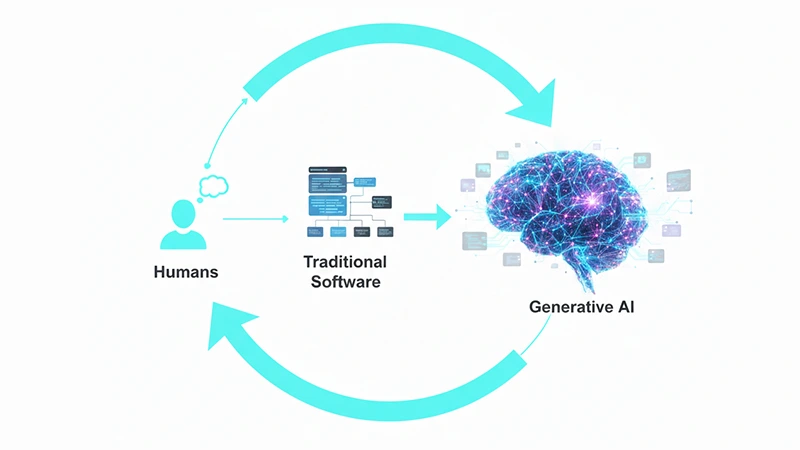

AI acts as the cognitive layer — not the structural foundation.

You can think of it this way:

Traditional software is the skeleton.

AI is the nervous system.

In a modern AI product, one without the other doesn’t work.

Data Replaces Code as the Core Asset

In traditional development, value lives in source code.

In generative AI systems, value shifts elsewhere:

- Training data quality

- Fine-tuning strategies

- Prompt design

- Feedback loops
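A feedback loop is where code and data meet. A minimal sketch, assuming a hypothetical log of user-rated interactions, shows how feedback might be filtered into fine-tuning candidates. The record fields, the rating threshold, and the normalization rule are illustrative choices, not a standard pipeline.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Interaction:
    """One logged exchange plus a user rating from 1 to 5. Field names are hypothetical."""
    prompt: str
    response: str
    rating: int

def build_finetune_set(log: list[Interaction], min_rating: int = 4) -> list[Interaction]:
    """Keep well-rated, non-empty, de-duplicated examples as fine-tuning candidates."""
    seen: set[tuple[str, str]] = set()
    kept: list[Interaction] = []
    for item in log:
        # Normalize whitespace and case so near-identical examples count as duplicates.
        key = (item.prompt.strip().lower(), item.response.strip().lower())
        if item.rating < min_rating or not item.response.strip() or key in seen:
            continue  # drop low-rated, empty, or duplicate examples
        seen.add(key)
        kept.append(item)
    return kept

log = [
    Interaction("Summarize the refund policy", "Refunds within 30 days.", 5),
    Interaction("summarize the refund policy ", "Refunds within 30 days.", 5),  # duplicate
    Interaction("Summarize the refund policy", "I don't know.", 2),             # low rating
    Interaction("Translate 'hello' to French", "", 5),                          # empty
    Interaction("Translate 'hello' to French", "Bonjour", 4),
]
dataset = build_finetune_set(log)
print(len(dataset))  # 2
```

The filtering logic is a few lines of ordinary code; what makes it valuable is the accumulated, curated log it runs over.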

This explains why AI leaders invest heavily in data infrastructure rather than just model architecture.

Code can be rewritten.

High-quality data cannot be easily replaced.

That shift has long-term implications for competition, regulation, and trust — especially as AI-generated outputs influence real-world decisions.

Creativity vs Reliability Is a Trade-Off, Not a Flaw

Generative AI excels where traditional software struggles:

- Natural language understanding

- Content creation

- Contextual reasoning

- Rapid ideation and prototyping

However, it struggles where traditional software shines:

- Exact calculations

- Legal guarantees

- Deterministic workflows

- Strict compliance requirements

This isn’t a weakness. It’s a boundary.

Problems arise when organizations ignore that boundary and treat AI as a replacement instead of a complement.

The most effective systems don’t choose between creativity and reliability. They combine them.

Expert Perspective: Why Engineering Still Matters

Andrew Ng has repeatedly emphasized a simple truth: AI projects succeed less because of the model itself than because of the solid engineering around it.

In practice, this means:

- Guardrails matter more than raw intelligence

- Monitoring matters more than demos

- Integration matters more than novelty
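Here is what a guardrail plus monitoring can look like in a minimal sketch, assuming a hypothetical model that is asked to classify support tickets and reply in JSON. The category set, field names, and fallback route are illustrative. The point is that deterministic code, not the model, decides what is accepted, and a counter records how often the model's output gets rejected.

```python
import json
from collections import Counter
from typing import Optional

ALLOWED_CATEGORIES = {"billing", "technical", "account"}  # hypothetical policy

def validate_ticket(raw_model_output: str) -> Optional[dict]:
    """Accept the model's JSON answer only if it has the expected shape and values."""
    try:
        data = json.loads(raw_model_output)
    except json.JSONDecodeError:
        return None  # not JSON at all
    if not isinstance(data, dict):
        return None
    category = data.get("category")
    confidence = data.get("confidence")
    if not isinstance(category, str) or category not in ALLOWED_CATEGORIES:
        return None
    # bool is a subclass of int in Python, so rule it out explicitly.
    if isinstance(confidence, bool) or not isinstance(confidence, (int, float)):
        return None
    if not 0.0 <= confidence <= 1.0:
        return None
    return {"category": category, "confidence": float(confidence)}

metrics: Counter = Counter()  # monitoring: how often does the guardrail fire?

def handle(raw: str) -> str:
    ticket = validate_ticket(raw)
    if ticket is None:
        metrics["rejected"] += 1
        return "needs_review"  # deterministic fallback, never the raw model text
    metrics["accepted"] += 1
    return ticket["category"]

outputs = [
    '{"category": "billing", "confidence": 0.93}',
    '{"category": "refunds", "confidence": 0.88}',  # not an allowed category
    'Sure! The category is billing.',                # not JSON
    '{"category": "technical", "confidence": 1.7}',  # confidence out of range
]
routes = [handle(o) for o in outputs]
print(routes)  # ['billing', 'needs_review', 'needs_review', 'needs_review']
print(metrics["accepted"], metrics["rejected"])  # 1 3
```

A rising rejection count is the kind of signal a demo never shows but production monitoring must.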

AI without good software engineering creates chaos.

Software without AI increasingly feels limited.

Progress happens in the overlap.

The Real Future: Hybrid Intelligence

The most resilient systems follow a clear division of labor:

- Traditional software handles control and enforcement

- AI handles interpretation and generation

- Humans handle judgment and responsibility
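The division of labor above can be sketched in a few lines, assuming a hypothetical refund workflow: a stubbed "model" reads a complaint and proposes an amount, deterministic rules enforce hard limits, and anything above a threshold goes to a person. The function names, the stub's keyword logic, and the 100.00 limit are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Decision:
    action: str   # "approve", "reject", or "escalate"
    amount: float
    reason: str

MAX_AUTO_REFUND = 100.00  # hypothetical policy limit, enforced in code

def fake_model_suggest_refund(complaint: str) -> float:
    """Stand-in for an AI call that reads free text and proposes a refund.

    A real system would call a model here; this keyword stub is hypothetical.
    """
    return 250.0 if "damaged" in complaint.lower() else 20.0

def decide(complaint: str, order_total: float,
           suggest: Callable[[str], float] = fake_model_suggest_refund) -> Decision:
    proposed = suggest(complaint)                # AI layer: interpretation
    if proposed < 0 or proposed > order_total:   # software layer: hard rules
        return Decision("reject", 0.0, "proposal violates order limits")
    if proposed > MAX_AUTO_REFUND:               # human layer: judgment
        return Decision("escalate", proposed, "above auto-approval limit")
    return Decision("approve", proposed, "within policy")

print(decide("Package arrived late", order_total=80.0))    # action='approve', amount=20.0
print(decide("Item arrived damaged", order_total=300.0))   # action='escalate', amount=250.0
print(decide("Item arrived damaged", order_total=120.0))   # action='reject', amount=0.0
```

Swapping the stub for a better model changes what gets proposed, but never what the rules allow.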

This hybrid approach scales better, fails more gracefully, and earns trust over time.

Organizations that understand this build systems that feel powerful and reliable.

Those that don’t often chase impressive demos — and quietly burn budgets.

Bottom Line: A Philosophical Shift, Not a Technical One

Generative AI didn’t replace software engineering.

It exposed its limits — and expanded its reach.

The real difference between generative AI and traditional software isn’t technical.

It’s philosophical.

Traditional software promises certainty.

Generative AI offers capability.

The future doesn’t ask you to choose one.

It demands that you understand both — and know when each one belongs.

FAQ

Q1: What is the core difference between generative AI and traditional software?

A1: Traditional software follows strict rules and predictable logic, while generative AI is pattern-driven, generating responses based on learned probabilities.

Q2: Will generative AI replace traditional software?

A2: No. Generative AI complements traditional software, providing intelligent suggestions and content, but it relies on traditional systems for control, security, and infrastructure.

Q3: Why does generative AI make mistakes even when it sounds confident?

A3: It predicts what comes next based on learned patterns, not verified facts. Because its responses are probabilistic, errors are expected even when it sounds confident.

Q4: Why does data matter more than code in generative AI?

A4: In generative AI, the quality of training data, fine-tuning, and feedback loops largely determines output accuracy. Code alone cannot replace high-quality data.

Q5: What is the most effective way to build with generative AI?

A5: Hybrid intelligence works best: traditional software ensures reliability, AI adds interpretation, and humans provide judgment to make systems powerful, scalable, and trustworthy.